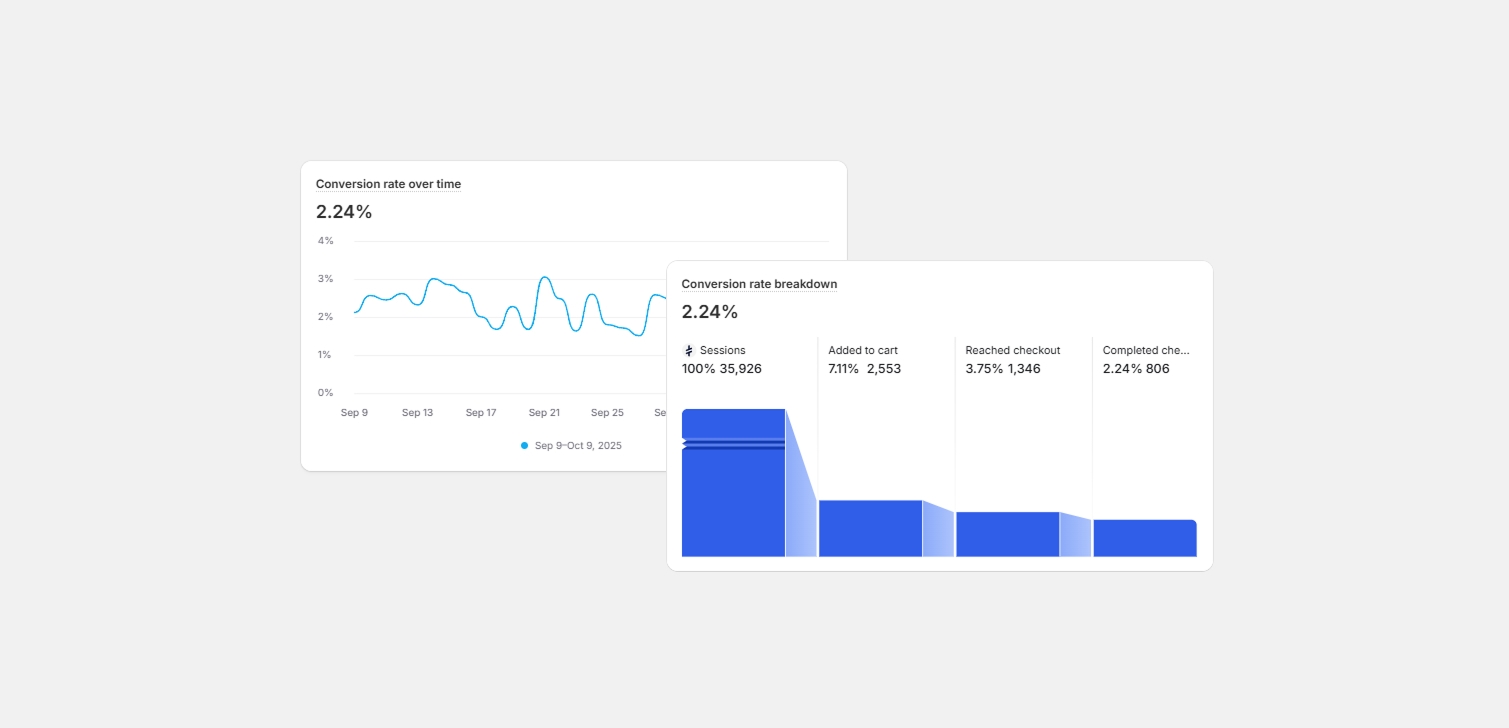

Why your conversion rate doesn’t always reflect your website’s real performance

10/8/2025 · 3 minute read

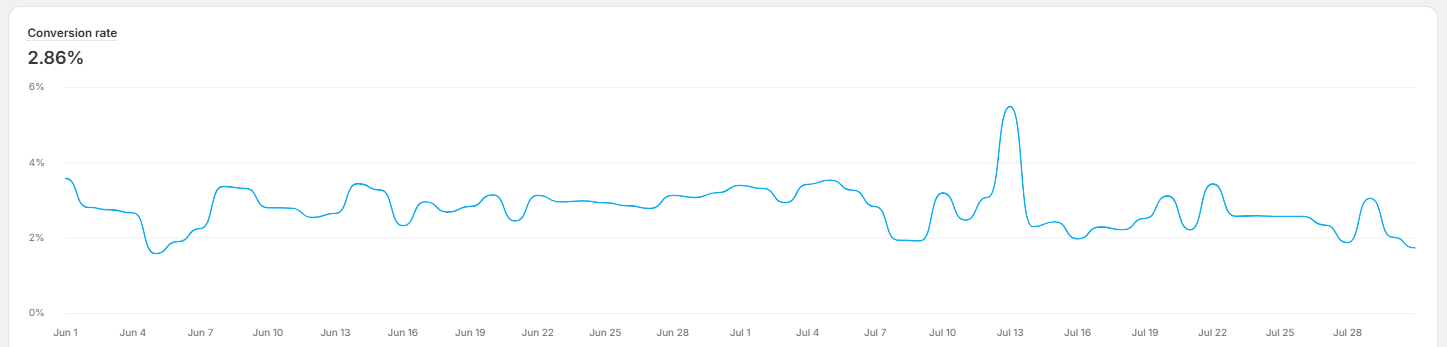

If your store’s conversion rate drops, it’s easy to assume something’s wrong with the site.

But that number can be misleading. Conversion rate is influenced by many factors outside your website - especially your marketing messages.

Let’s unpack how this happens, why it matters, and what you can do to measure performance more accurately.

When “good” traffic makes your conversion rate look worse

Imagine your website sells high-end kitchen appliances. Your site design is solid, your checkout works smoothly, and your product pages are clear.

Now you change your Facebook ads from “Best in the market” to “Best value for money in the market.”

You’ve just shifted your audience.

The new ad attracts more clicks, especially from people searching for deals. That means more traffic - great, right? But when those visitors land on your site and see premium prices, their expectations aren’t met. They bounce quickly or browse without buying.

Your traffic went up. Your conversion rate went down.

Did your website suddenly become worse? Of course not. The type of traffic changed, not the site itself.
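To see why, remember that the overall conversion rate is a weighted average of how each group of visitors converts. The Python sketch that follows uses made-up numbers for two hypothetical audience segments. Each segment's own conversion rate stays exactly the same before and after the ad change, and the only difference is that the new ad brings in far more deal-seekers.

```python
# Hypothetical conversion rates per audience segment. These stay fixed:
# the website converts each group exactly as well as before.
SEGMENT_RATES = {"premium_seekers": 0.030, "deal_seekers": 0.005}


def blended_rate(visits: dict[str, int], rates: dict[str, float]) -> float:
    """Overall conversion rate = total orders / total visits."""
    orders = sum(visits[segment] * rates[segment] for segment in visits)
    return orders / sum(visits.values())


# Illustrative traffic before and after the ad copy change.
before = {"premium_seekers": 8_000, "deal_seekers": 2_000}
after = {"premium_seekers": 8_000, "deal_seekers": 12_000}

print(f"before: {blended_rate(before, SEGMENT_RATES):.2%}")  # before: 2.50%
print(f"after:  {blended_rate(after, SEGMENT_RATES):.2%}")   # after:  1.50%
```

In this example orders actually rise from 250 to 300, yet the overall rate falls from 2.50% to 1.50%, even though neither segment converts any worse.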

How external factors shape your website’s metrics

Conversion rate depends on more than your website’s usability or copy. Here are a few things that can distort the numbers:

Ad messaging – Changing ad language or targeting affects who visits and what they expect.

Seasonality – Holiday campaigns, sales, or product launches bring different audiences at different times.

Referral sources – Visitors from an organic blog post behave differently than those from a discount ad.

Pricing updates – Even a small increase can impact perceived value, especially if ads or old links still show the old price.

This is why comparing conversion rates week to week (or month to month) can be misleading. You’re not always comparing apples to apples.

Why split testing is the only reliable way to measure changes

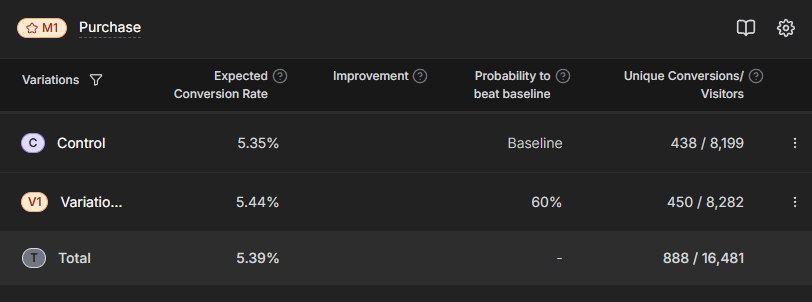

If you want to know whether a site change actually improves conversions, you need data that’s statistically valid. That means running controlled experiments.

A proper A/B test isolates one variable while keeping everything else the same. For example:

Version A: your current homepage.

Version B: same homepage, but with updated product descriptions.

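You split your traffic randomly between both versions and measure the results. Because both versions run at the same time and receive the same mix of visitors, outside influences like ad changes or seasonality affect them equally. Once enough visitors have gone through the test, you can check whether the difference is statistically significant or just random noise.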
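One common way to check whether a difference is real is a two-proportion z-test. Here is a minimal Python sketch, assuming you already have visitor and order counts for each version. The counts are hypothetical, and dedicated testing tools may use other methods (for example sequential or Bayesian statistics).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(orders_a: int, visitors_a: int,
                          orders_b: int, visitors_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = orders_a / visitors_a
    rate_b = orders_b / visitors_b
    # Pooled rate: the best estimate if both versions truly convert equally.
    pooled = (orders_a + orders_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("need at least one order and one non-converting visitor")
    z = (rate_b - rate_a) / std_err
    # Probability of a gap at least this large in either direction by chance.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: A = current homepage, B = updated product descriptions.
z, p = two_proportion_z_test(orders_a=200, visitors_a=10_000,
                             orders_b=250, visitors_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers (2.0% vs 2.5%) the p-value comes out around 0.017, below the conventional 0.05 threshold. Decide the sample size before the test starts and evaluate at the end. Stopping the moment the p-value dips below 0.05 inflates the chance of a false positive.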

Without this kind of test, any drop or increase in conversion rate could be caused by outside influences - ad targeting, audience shifts, or even the day of the week.

Quick FAQ

Q: My conversion rate dropped. What should I do? The first thing to look at is your traffic composition. Use Shopify Analytics to check whether the mix of traffic sources changed during that period. For example, less search traffic and more social traffic may lead to a lower overall conversion rate, even if nothing on your site changed.
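Checking traffic composition comes down to two numbers per source: its share of sessions and its own conversion rate. The minimal Python sketch below uses invented session and order counts, such as figures you might copy out of a sessions-by-traffic-source report. The source names and numbers are illustrative assumptions, not a Shopify API.

```python
# Hypothetical (sessions, orders) per traffic source for two periods.
PERIOD_1 = {"search": (6_000, 180), "social": (2_000, 20), "direct": (2_000, 60)}
PERIOD_2 = {"search": (4_000, 120), "social": (6_000, 60), "direct": (2_000, 60)}


def breakdown(period: dict[str, tuple[int, int]]) -> dict[str, tuple[float, float]]:
    """Map each source to (share of all sessions, that source's conversion rate)."""
    total_sessions = sum(sessions for sessions, _ in period.values())
    return {
        source: (sessions / total_sessions, orders / sessions)
        for source, (sessions, orders) in period.items()
    }


def overall_rate(period: dict[str, tuple[int, int]]) -> float:
    """Store-wide conversion rate: all orders divided by all sessions."""
    sessions = sum(s for s, _ in period.values())
    orders = sum(o for _, o in period.values())
    return orders / sessions


print(f"overall: {overall_rate(PERIOD_1):.2%} -> {overall_rate(PERIOD_2):.2%}")
before, after = breakdown(PERIOD_1), breakdown(PERIOD_2)
for source in PERIOD_1:
    (share_1, rate_1), (share_2, rate_2) = before[source], after[source]
    print(f"{source:<7} share {share_1:.0%} -> {share_2:.0%}, "
          f"conversion {rate_1:.2%} -> {rate_2:.2%}")
```

In this made-up data every source converts exactly as well as before. The overall rate drops from 2.60% to 2.00% only because social grew from 20% to 50% of sessions. If one source's own rate had fallen, that would be a stronger hint that something changed for those visitors.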

Q: What’s a “good” conversion rate for Shopify? There’s no universal number. Benchmarks vary by product, price, and audience. Focus on trends and test results instead of comparing to others.

Q: Is split testing possible for all stores? As a rule of thumb, when the metric you're testing is conversions, it's good to have at least 1,000 orders per month. You can still split test below that threshold, for example by running the experiment longer. The trade-off is that the longer a test needs to run to reach statistical significance, the more can change in your store and its traffic along the way, which weakens the result.
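To get a feel for why low-volume stores struggle, you can estimate how many visitors each version needs before you start. This sketch uses the standard normal-approximation sample-size formula for comparing two proportions. The 2% baseline conversion rate, 10% relative lift, 5% significance level, 80% power and 20,000 weekly visitors are all assumptions chosen for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(base_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH version to detect the lift with a two-sided test."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    if p1 == p2:
        raise ValueError("relative_lift must be non-zero")
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    root = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
            + z_power * sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return ceil(root ** 2 / (p2 - p1) ** 2)


def weeks_needed(per_variant: int, weekly_visitors: int, variants: int = 2) -> float:
    """Weeks of traffic needed if visitors are split evenly across versions."""
    return per_variant * variants / weekly_visitors


n = visitors_per_variant(base_rate=0.02, relative_lift=0.10)
print(f"{n:,} visitors per version, about {weeks_needed(n, 20_000):.1f} weeks")
```

Under these assumptions the estimate is roughly 80,000 visitors per version, a little over eight weeks of traffic. A smaller expected lift or less traffic pushes that out much further.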

Wrapping up

Conversion rates are useful, but they’re not the whole story. A higher or lower number doesn’t always mean your website improved or worsened.

Think of it like a temperature reading - accurate for what it measures, but not a diagnosis. To truly understand performance, look at your traffic sources, audience expectations, and most importantly, run controlled tests before drawing conclusions.

That’s the only way to know what’s really working, and what’s just noise.